In our previous article, "Language Models: Going from Large to Small," we discussed the evolving landscape of language models and their impact on enterprise AI adoption. As organizations increasingly deploy these models in production environments, the need for robust infrastructure becomes more pressing. This infrastructure is not just about computational resources but also about effectively managing and leveraging enterprise data in the age of AI.

Recent market movements have highlighted the strategic importance of enterprise data infrastructure. OpenAI's acquisition of Rockset, a real-time search and analytics database company, signals its recognition that powerful, comprehensive search and retrieval capabilities are crucial for enterprise customers. However, OpenAI isn't the only enterprise LLM player in town, which leaves room for other search engines and infrastructure providers to meet this large and growing need.

The Current AI Landscape in Enterprise Data

The adoption of AI in enterprise settings is accelerating rapidly. According to a 2024 McKinsey survey, 72% of responding companies reported using AI, up from around 50% in previous years. This jump reflects the value organizations expect to gain from AI-powered solutions.

A significant trend shaping this landscape is the advantage held by large technology companies that are already deeply integrated with their customers' data infrastructure. Salesforce, for instance, has built an AI offering called Agentforce that draws on this deep customer integration to deliver AI-powered solutions across business functions.

Key Enterprise AI Challenges

The integration of AI into enterprise environments presents several critical challenges that any comprehensive solution must address:

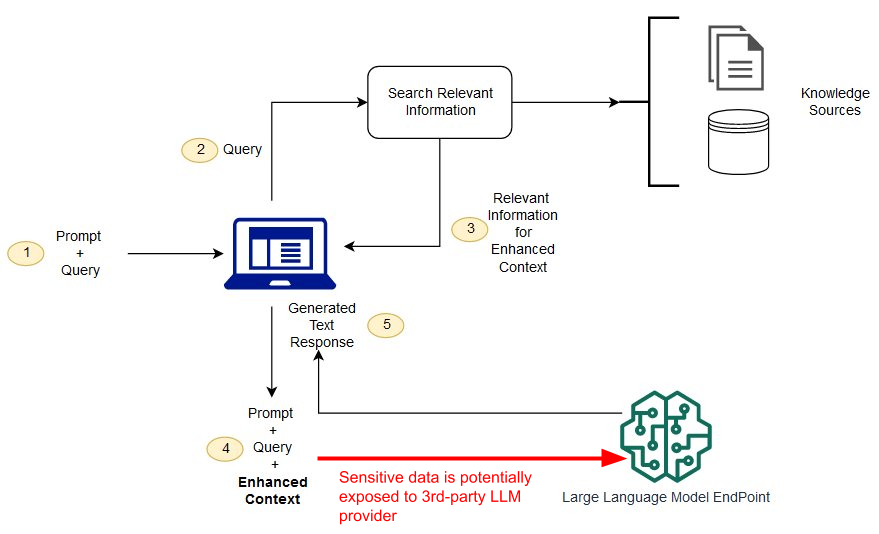

Data Privacy and Security: Organizations need multi-layered defense strategies to protect sensitive company data. These include data sanitizers that recognize sensitive information in context and act as an AI shield and firewall, preventing data from leaving host enclaves.
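To make the idea of a data sanitizer concrete, here is a minimal sketch of a rule-based redaction step that replaces sensitive values with typed placeholders before text is sent to an external model. The pattern set and the `sanitize` function are hypothetical, and regular expressions are a deliberate simplification: a sanitizer that understands context would layer classifiers or named-entity recognition on top of rules like these.

```python
import re

# Hypothetical rule set for a few common sensitive-data types.
# Regexes are a simplification; a context-aware sanitizer would add
# NER models or classifiers on top of rules like these.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def sanitize(text: str) -> tuple[str, dict[str, int]]:
    """Replace sensitive spans with typed placeholders; return the text and per-type counts."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


if __name__ == "__main__":
    safe, found = sanitize("Contact jane.doe@example.com, SSN 123-45-6789, card 4111 1111 1111 1111.")
    print(safe)   # Contact [EMAIL], SSN [SSN], card [CARD].
    print(found)  # {'EMAIL': 1, 'SSN': 1, 'CARD': 1}
```

Typed placeholders (rather than blanking the text) keep the redacted prompt meaningful to the model, and the per-type counts give a simple audit signal for what was stopped at the boundary.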

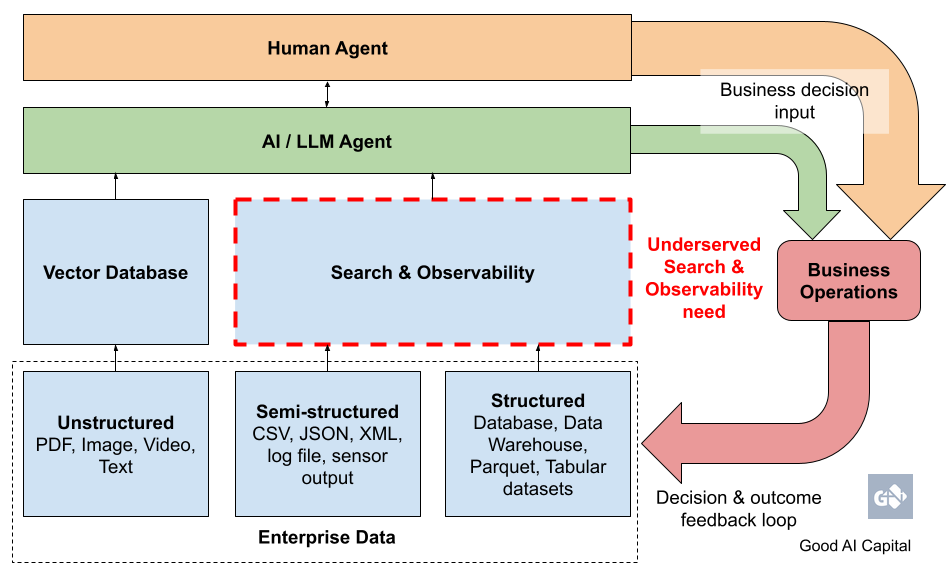

Search and Retrieval Infrastructure: Enterprises increasingly need search, observability, and retrieval capabilities over their data. As AI agents gain full contextual awareness of business operations, these capabilities will become critical for automated decision-making. Search tasks fall into two main types:

Keyword search: This type of search returns results based on exact matches, synonyms, or similar words. It excels at retrieving specific sets of records or anomaly events.

Semantic search: This type of search considers the relationships between words, their context, and the intent behind the query. It is ideal for handling complex, unstructured queries and for linking multiple related queries, much as a user refines a question through consecutive Google searches.
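The contrast between the two search types can be sketched in a few lines. The sketch assumes a tiny in-memory corpus whose three-dimensional "embeddings" are made-up numbers standing in for the output of a real embedding model (which would produce vectors with hundreds of dimensions); the record ids, texts, and function names are hypothetical.

```python
import math

# Hypothetical corpus: id -> (text, toy embedding). The vectors are invented
# for illustration; a real system would obtain them from an embedding model.
DOCS = {
    "inv-001": ("invoice overdue for vendor acme", [0.9, 0.1, 0.0]),
    "tkt-042": ("customer cannot log in after password reset", [0.0, 0.2, 0.9]),
    "tkt-043": ("late payment reminder sent to supplier", [0.8, 0.3, 0.1]),
}


def keyword_search(query: str) -> list[str]:
    """Exact-match search: return ids of docs containing every query term."""
    terms = query.lower().split()
    return [doc_id for doc_id, (text, _) in DOCS.items()
            if all(term in text.split() for term in terms)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def semantic_search(query_vec: list[float], k: int = 2) -> list[str]:
    """Similarity search: rank docs by cosine similarity to the query embedding."""
    ranked = sorted(DOCS, key=lambda doc_id: cosine(query_vec, DOCS[doc_id][1]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    print(keyword_search("overdue"))          # ['inv-001']
    print(keyword_search("overdue payment"))  # [] (no doc contains both terms)
    # Pretend embedding of "overdue payment": close to both billing records.
    print(semantic_search([0.85, 0.2, 0.05]))  # ['inv-001', 'tkt-043']
```

Keyword search misses `tkt-043` because it says "late payment" rather than "overdue", while the similarity ranking surfaces both billing records. Conversely, keyword search remains the more precise tool when an exact term, such as an error code or record id, must match.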

AI agents' deductive and decision-making capabilities are unlocked when they are augmented with keyword search, structured queries, and analytics across all forms of data. A baseline RAG (Retrieval-Augmented Generation) architecture, which supplements an LLM query only with semantically similar unstructured data retrieved from a vector database, is insufficient for enterprise needs.
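One common way to go beyond vector-only retrieval is to run several retrievers (keyword, vector, and a structured query) and merge their rankings. The sketch below uses reciprocal rank fusion (RRF), a standard rank-merging technique, with the conventional constant k = 60. It is one option among several, and the retriever outputs and ids are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked id lists: score(d) = sum over lists of 1 / (k + rank of d), ranks from 1."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.__getitem__, reverse=True)


if __name__ == "__main__":
    # Hypothetical outputs of three retrievers for the same agent question.
    keyword_hits = ["evt-17", "evt-03"]         # exact matches on an error code
    vector_hits = ["doc-9", "evt-03", "doc-2"]  # semantically similar text
    sql_hits = ["evt-03", "evt-17"]             # rows from a structured query
    print(reciprocal_rank_fusion([keyword_hits, vector_hits, sql_hits]))
    # ['evt-03', 'evt-17', 'doc-9', 'doc-2']
```

Because `evt-03` appears in all three lists, it rises to the top even though it is first in only one of them. This is the kind of cross-source evidence a vector-only pipeline would not see.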

Observability and Monitoring: Enterprise AI systems require robust observability and monitoring to maintain system health and performance, particularly as AI agents become integral to business operations. These services will increasingly serve AI agents directly, moving away from visualization-heavy dashboards designed for human analysts.
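Observability that serves agents rather than dashboards starts with structured, machine-readable telemetry. The minimal sketch below wraps a function in a tracing decorator that records each call's latency and outcome, then exposes a simple health metric an agent could query directly. `traced`, `TRACE_SINK`, `retrieve_context`, and `error_rate` are hypothetical names; a production system would export spans to a collector (for example via OpenTelemetry) instead of keeping them in an in-memory list.

```python
import functools
import time

TRACE_SINK: list[dict] = []  # hypothetical in-memory sink standing in for a trace collector


def traced(span_name: str):
    """Decorator that records latency and outcome of every call as a structured record."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "ok"
            try:
                return fn(*args, **kwargs)
            except Exception as exc:
                status = f"error:{type(exc).__name__}"
                raise
            finally:
                # Runs on success and failure alike, so every call leaves a record.
                TRACE_SINK.append({
                    "span": span_name,
                    "status": status,
                    "latency_ms": (time.perf_counter() - start) * 1000,
                })
        return wrapper
    return decorator


@traced("retrieve_context")
def retrieve_context(query: str) -> list[str]:
    """Hypothetical retrieval step that an AI agent might call."""
    if not query:
        raise ValueError("empty query")
    return [f"doc about {query}"]


def error_rate(span_name: str) -> float:
    """Agent-facing health check: fraction of recorded calls to a span that failed."""
    spans = [r for r in TRACE_SINK if r["span"] == span_name]
    if not spans:
        return 0.0
    return sum(r["status"] != "ok" for r in spans) / len(spans)


if __name__ == "__main__":
    retrieve_context("q3 revenue")
    try:
        retrieve_context("")
    except ValueError:
        pass
    print(error_rate("retrieve_context"))  # 0.5
```

An agent can call a function like `error_rate` (or a richer query over the same records) to decide whether to retry, fall back, or escalate, without anyone reading a chart.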

Palantir's Approach: Setting the Enterprise Standard

Through its Foundry platform, Palantir has built industry-leading playbooks that help Fortune 500 companies across industries operationalize their proprietary data and apply AI models across their enterprise systems. As Palantir's CEO, Alex Karp, puts it:

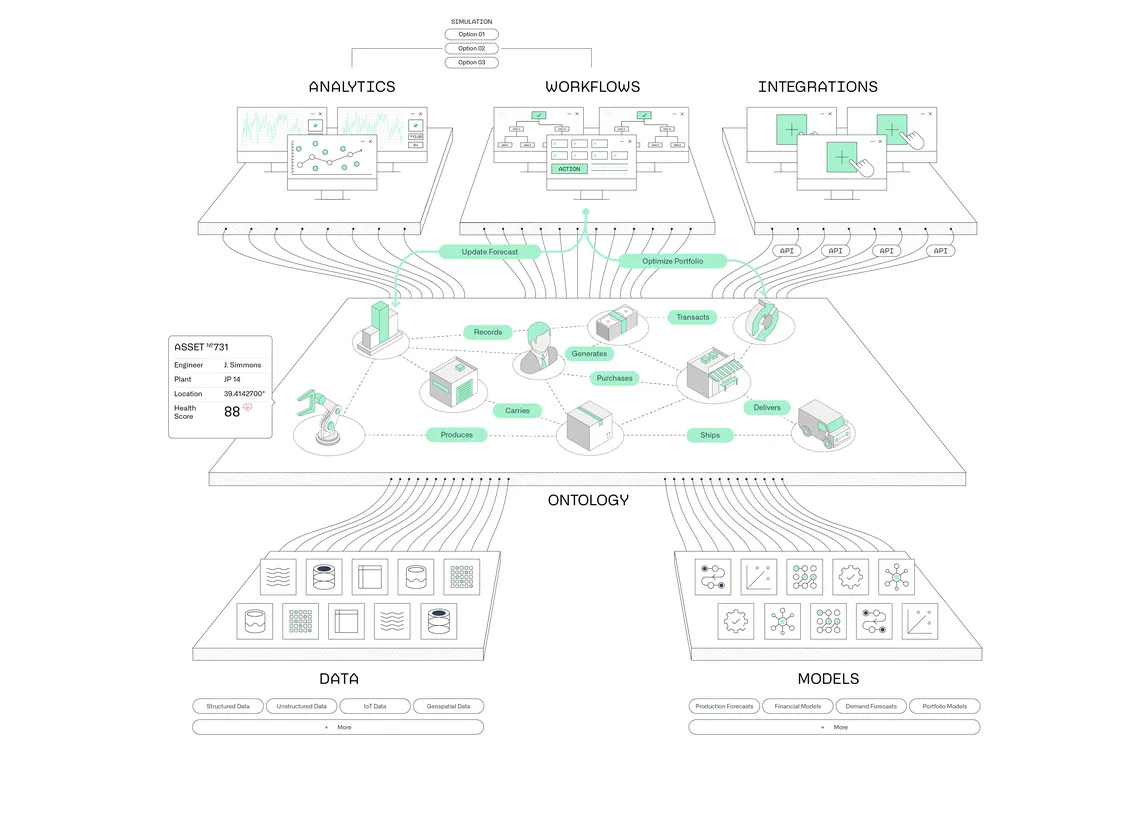

"All of the value in the market will go to chips and what we call the Ontology. The Ontology allows you to take an LLM, use it, refine it, and impose it on your enterprise."

The Ontology: A Comprehensive Data Foundation

An enterprise digital twin is a virtual representation of an organization's entire operational ecosystem, including its processes, systems, and data flows. This digital replica enables businesses to simulate, analyze, and optimize their operations in real time. Palantir's approach centers on creating a business digital twin data foundation, which it calls an Ontology. This foundation:

Catalogs all meaningful assets and components of a business into object categories and indexes them for querying

Defines business actions to capture and complete the feedback loop of human and AI-driven decision-making

Provides full contextual awareness of a business's operations

Enables AI agents to be trained to make decisions while the data foundation and LLMs continue to improve

Underpins the decision-making infrastructure for both business users and AI models

Enables fast, informed decisions on live data, powered by low-latency search engines
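To illustrate the general pattern the list describes (typed business objects indexed for querying, plus actions that write decisions back and log them), here is a minimal in-memory sketch. It is not Palantir's Ontology API: `OntologyObject`, `Ontology`, `apply_action`, and the supplier example are hypothetical, and a real system would back the index with a database or search engine rather than a Python dict.

```python
from dataclasses import dataclass, field


@dataclass
class OntologyObject:
    object_type: str  # business concept, e.g. "Supplier" or "PurchaseOrder"
    object_id: str
    properties: dict


@dataclass
class Ontology:
    # Index of objects keyed by (type, id) so they can be looked up and queried.
    objects: dict[tuple[str, str], OntologyObject] = field(default_factory=dict)
    # Every action is logged, closing the feedback loop on human and AI decisions.
    decision_log: list[dict] = field(default_factory=list)

    def add(self, obj: OntologyObject) -> None:
        self.objects[(obj.object_type, obj.object_id)] = obj

    def query(self, object_type: str, **filters) -> list[OntologyObject]:
        """Return objects of a type whose properties equal all given filter values."""
        return [obj for (otype, _), obj in self.objects.items()
                if otype == object_type
                and all(obj.properties.get(k) == v for k, v in filters.items())]

    def apply_action(self, action: str, obj: OntologyObject, updates: dict, actor: str) -> None:
        """Apply a business action to an object and record who decided what."""
        obj.properties.update(updates)
        self.decision_log.append(
            {"action": action, "object_id": obj.object_id, "updates": updates, "actor": actor})


if __name__ == "__main__":
    onto = Ontology()
    onto.add(OntologyObject("Supplier", "sup-1", {"name": "Acme", "risk": "high"}))
    onto.add(OntologyObject("Supplier", "sup-2", {"name": "Globex", "risk": "low"}))
    for supplier in onto.query("Supplier", risk="high"):
        # An AI agent (or a human) escalates high-risk suppliers; the decision is logged.
        onto.apply_action("escalate", supplier, {"status": "under_review"}, actor="agent:risk-monitor")
    print([o.object_id for o in onto.query("Supplier", status="under_review")])  # ['sup-1']
```

The `decision_log` is what turns the object index into a feedback loop: each recorded action, whether taken by a person or an agent, becomes data that later decisions and model refinements can draw on.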

This comprehensive data foundation serves as the backbone of Palantir’s “Ontology-Powered Operating System for the Modern Enterprise,” providing organizations with a unified platform to understand, analyze, and optimize their operations.

Opportunities for Startups: Scaling Enterprise AI for SMBs

While Palantir dominates the enterprise market by deploying engineers who work closely with customers to build sophisticated Ontologies and workflows, startups have a significant opportunity to serve Small and Medium-sized Businesses (SMBs). As Palantir continues to expand its self-serve capabilities within Foundry, startups can leverage AI agents to streamline implementation and reduce complexity, efficiently bringing similar capabilities to SMB customers who need essential data integration and workflow solutions.

Key Areas of Opportunity

Simplified Data Integration and Management: Startups can develop scalable, user-friendly solutions that help SMBs create their own "Ontologies" or comprehensive data foundations. These solutions should be more accessible and require less technical expertise than enterprise-grade systems. As AI agents become more sophisticated, they will play an increasingly important role in automating data management and decision-making processes. Companies like Coblocks are leading the way in this space, providing end-to-end data workspaces for data teams that manage mission-critical tools and workflows in small and mid-market enterprises.

AI-Powered Security and Privacy: Developing AI-driven security solutions that can protect sensitive data without requiring the extensive resources of a large enterprise. This could include automated data anonymization, AI-powered threat detection, and simplified compliance management tools. Companies like Credal and Guardrails AI are pioneering solutions in this space, offering robust protections for enterprise data workflows.
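As one concrete building block for automated data anonymization, the sketch below applies keyed pseudonymization: each identifier is replaced by a truncated HMAC-SHA256 token, so the same customer always maps to the same token (joins and counts still work) while the raw value never leaves the source system. The function names and key handling are hypothetical simplifications. Pseudonymization is weaker than true anonymization: anyone holding the key can recompute tokens for known values and re-identify records, so the key must stay inside the enclave.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes, prefix: str = "id") -> str:
    """Deterministically map a value to a keyed token (HMAC-SHA256, first 16 hex chars)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:16]}"


def pseudonymize_records(records: list[dict], fields: set[str], key: bytes) -> list[dict]:
    """Return copies of records with the named fields tokenized; other fields are untouched."""
    return [
        {name: (pseudonymize(str(val), key, prefix=name) if name in fields else val)
         for name, val in record.items()}
        for record in records
    ]


if __name__ == "__main__":
    # Hypothetical key; in practice it would come from a secrets manager.
    secret = b"replace-with-a-managed-secret"
    rows = [
        {"email": "jane@example.com", "amount": 120},
        {"email": "jane@example.com", "amount": 80},
        {"email": "raj@example.com", "amount": 45},
    ]
    for row in pseudonymize_records(rows, {"email"}, secret):
        print(row)  # same email -> same token, amounts unchanged
```

Using a keyed HMAC rather than a plain hash matters here: an unkeyed SHA-256 of an email address can be reversed by simply hashing a list of likely addresses.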

Customizable Search and Retrieval: Creating search and retrieval systems tailored for SMBs that combine both keyword and semantic search capabilities, enabling AI agents to make fast, informed decisions on live data. This approach would enhance the AI Digital Twin concept, allowing for more comprehensive and accurate data retrieval and analysis. This infrastructure is critical to Palantir’s own Ontology platform.

Observability and Monitoring: Developing next-generation observability and monitoring tools for AI applications that cater to the needs and resources of SMBs. These could include AI agents that monitor service health and resilience, providing actionable insights without overwhelming smaller IT teams. Laminar AI is pioneering this space with its open-source platform for engineering LLM products, which offers tracing, evaluation, annotation, and analysis of LLM data. In effect, it gives SMBs enterprise-grade observability similar to Datadog, but tailored specifically to AI applications.

Vertical-Specific AI Solutions: Creating AI-powered tools and platforms tailored to specific industries or business functions that are popular among SMBs, such as retail, professional services, or local manufacturing. Companies like Upsolve AI are leading this transformation by enabling businesses to build embedded analytics powered by AI agents, providing industry-specific insights and capabilities to their customers while requiring minimal technical expertise.

Scaling AI Success to All

To accelerate development, startups can build on existing AI infrastructure, such as OpenAI's platform. However, the key to success will lie in providing better "guardrails" and more comprehensive solutions that address the specific needs of SMBs, ultimately democratizing enterprise AI capabilities. Such solutions should offer:

Simplified integration with standard SMB software and data sources

User-friendly interfaces that don't require extensive technical knowledge

Scalable pricing models that make advanced AI capabilities accessible to smaller businesses

Built-in compliance and security features tailored to SMB regulatory environments

Conclusion

The integration of AI into enterprise data stacks presents both significant challenges and exciting opportunities. While companies like Palantir have paved the way for AI-driven data management in large enterprises, there's a vast untapped market for startups to bring similar capabilities to SMBs. The key to success lies in developing AI agent architectures that can effectively handle the complexities of enterprise data while remaining accessible and scalable for smaller businesses.

At Good AI, we're excited about these technologies' transformative potential and eager to engage with innovators and businesses at the forefront of this revolution. Our industry ties and deep understanding of emerging enterprise AI trends position us uniquely to support startups in this space.

We'd love to connect if you're working on solutions to bring enterprise-grade AI capabilities to SMBs or grappling with these challenges in your organization. The future of enterprise AI is being shaped now, and there's never been a more exciting time to be part of this journey.
