Navigating Headwinds: NVIDIA, Export Restrictions, and the Next Frontier of AI

On April 15, 2025, NVIDIA announced it would take a $5.5 billion charge due to new U.S. export controls blocking the shipment of its H20 chips to China. This news sent shockwaves through global tech markets and reignited questions about the future of AI hardware, sovereignty, and supply chains.

What the Export Restriction Really Means

The U.S. Department of Commerce now requires an export license before any AI chip exceeding certain performance thresholds can be shipped to China. NVIDIA’s H20, explicitly designed to comply with earlier restrictions, has now been swept up in this new rule.

Here’s the key: NVIDIA must apply for a license for each shipment, with no guarantee of approval. Until a license is granted, committed purchase orders cannot be fulfilled. The burden is on NVIDIA, not the Chinese buyer, to secure the license. This regulatory uncertainty is what triggered the multi-billion-dollar write-down.

H20 vs. H100: What’s the Difference?

The H20 was a dialed-down version of the H100, engineered to fall under the regulatory performance caps. It features lower interconnect bandwidth and compute throughput while maintaining CUDA compatibility and Tensor Core acceleration.

The H100 is a top-tier training and inference GPU used globally by hyperscalers and research labs. In key benchmarks, it delivers up to 6x the performance of the H20. However, both are now restricted from China unless licensed.

The Impact on China’s AI Ambitions

China has heavily relied on NVIDIA hardware to train and deploy large-scale AI models, such as those developed by Baidu, Alibaba, and ByteDance. The new restrictions may slow model iteration and force a pivot to domestic hardware.

Enter Huawei. Its Ascend 910C chip is quickly becoming the top domestic alternative. While not yet matching the H100 in raw performance or ecosystem maturity, it offers a viable inference pathway. Other domestic players, such as Biren and Cambricon, are also likely to benefit.

This could further bifurcate global AI infrastructure into U.S.-aligned vs. China-aligned ecosystems.

Industry Headwinds

NVIDIA is not alone. AMD, also affected by export restrictions, expects a potential impact of up to $800 million related to its MI300 series. Semiconductor equipment makers, such as ASML and Advantest, have also experienced stock declines.

That said, NVIDIA is better positioned than anyone else to adapt and lead.

Why We Are Bullish on NVIDIA

Despite short-term headwinds, NVIDIA’s recent GTC keynote made one thing clear: Blackwell is the future.

The Blackwell platform is not just another GPU generation. Blackwell is the backbone of what NVIDIA calls an AI factory—a next-gen data center optimized to transform data into intelligence at scale. These AI factories run 24/7, automating the full loop of training, inference, and decision-making across massive AI workloads.

Its comprehensive architecture is designed to support:

Exaflop-scale inference

Transformer Engine v2 with FP4 (4-bit floating point) support

Disaggregated NVLink for massive inter-GPU communication

Grace-Blackwell CPU-GPU integration for shared memory compute
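
To make the FP4 item above concrete, here is a minimal sketch of how little a 4-bit float can represent. It assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias 1) described in the OCP Microscaling formats specification; this article does not say which layout Blackwell uses, so treat the layout as an assumption. The helper names `decode_e2m1` and `quantize_fp4` are hypothetical, and the rounding is simplified.

```python
def decode_e2m1(code: int) -> float:
    """Decode a 4-bit code (0..15), assuming an E2M1 layout with exponent bias 1."""
    sign = -1.0 if (code >> 3) & 1 else 1.0
    exponent = (code >> 1) & 0b11
    mantissa = code & 1
    if exponent == 0:
        # Subnormal range: no implicit leading 1, so the only nonzero value is 0.5.
        return sign * mantissa * 0.5
    # Normal range: implicit leading 1, and the mantissa bit is worth one half.
    return sign * (1.0 + 0.5 * mantissa) * 2.0 ** (exponent - 1)


# All distinct values the format can hold (+0 and -0 collapse into one entry).
FP4_VALUES = sorted({decode_e2m1(code) for code in range(16)})


def quantize_fp4(x: float) -> float:
    """Snap x to the nearest representable value; exact ties go to the lower value.

    Simplified on purpose: real hardware applies per-block scaling and its own
    rounding rule, neither of which is modeled here.
    """
    return min(FP4_VALUES, key=lambda v: abs(v - x))
```

Under this assumption the format holds only 15 distinct values between -6 and 6, which is why low-precision inference typically pairs such formats with scaling factors rather than storing raw weights directly.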

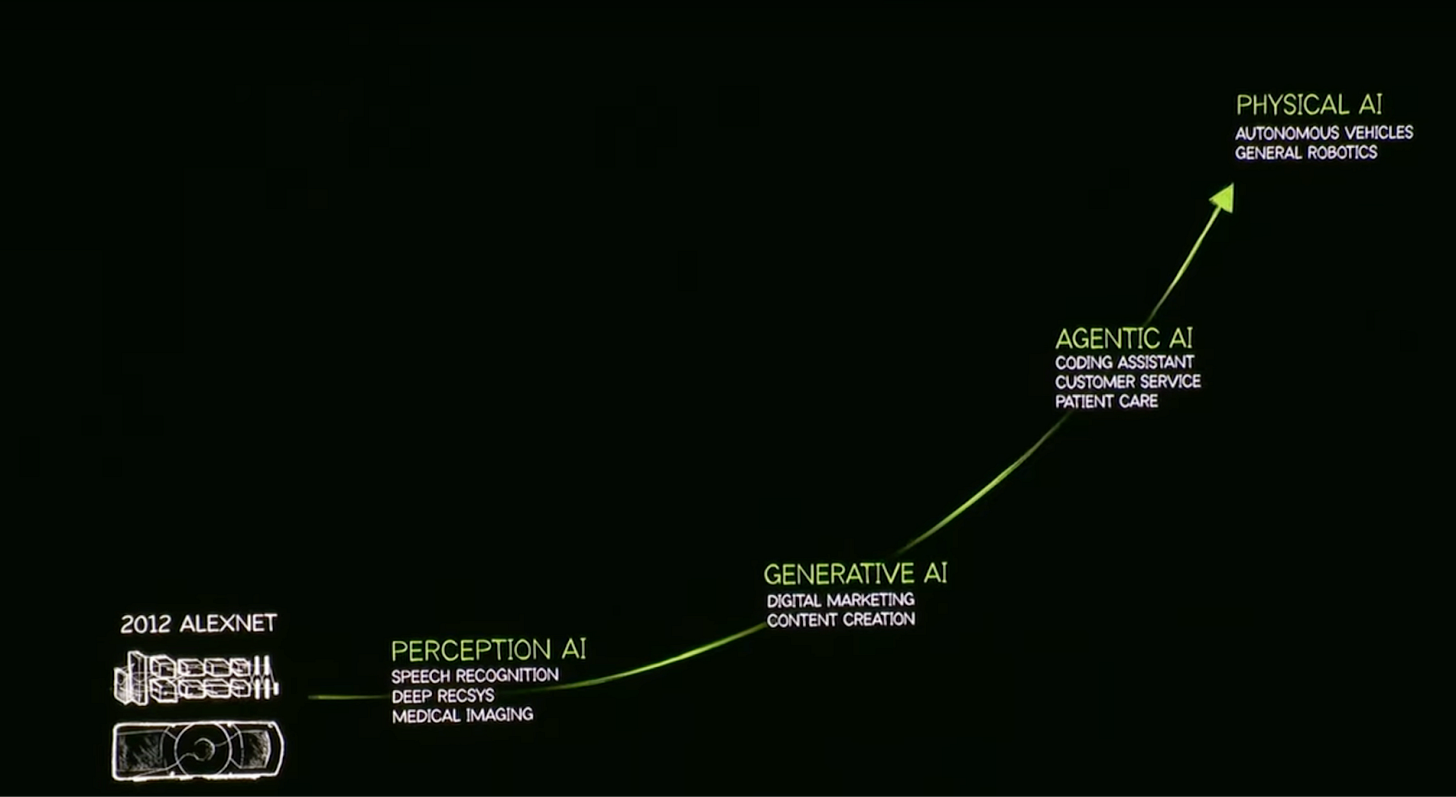

Blackwell is purpose-built for the next evolution of AI: not just Generative AI with large language models but Agentic AI, where an AI can now reason, plan, and act.

The Rise of Reasoning and the Latency-Throughput Tradeoff

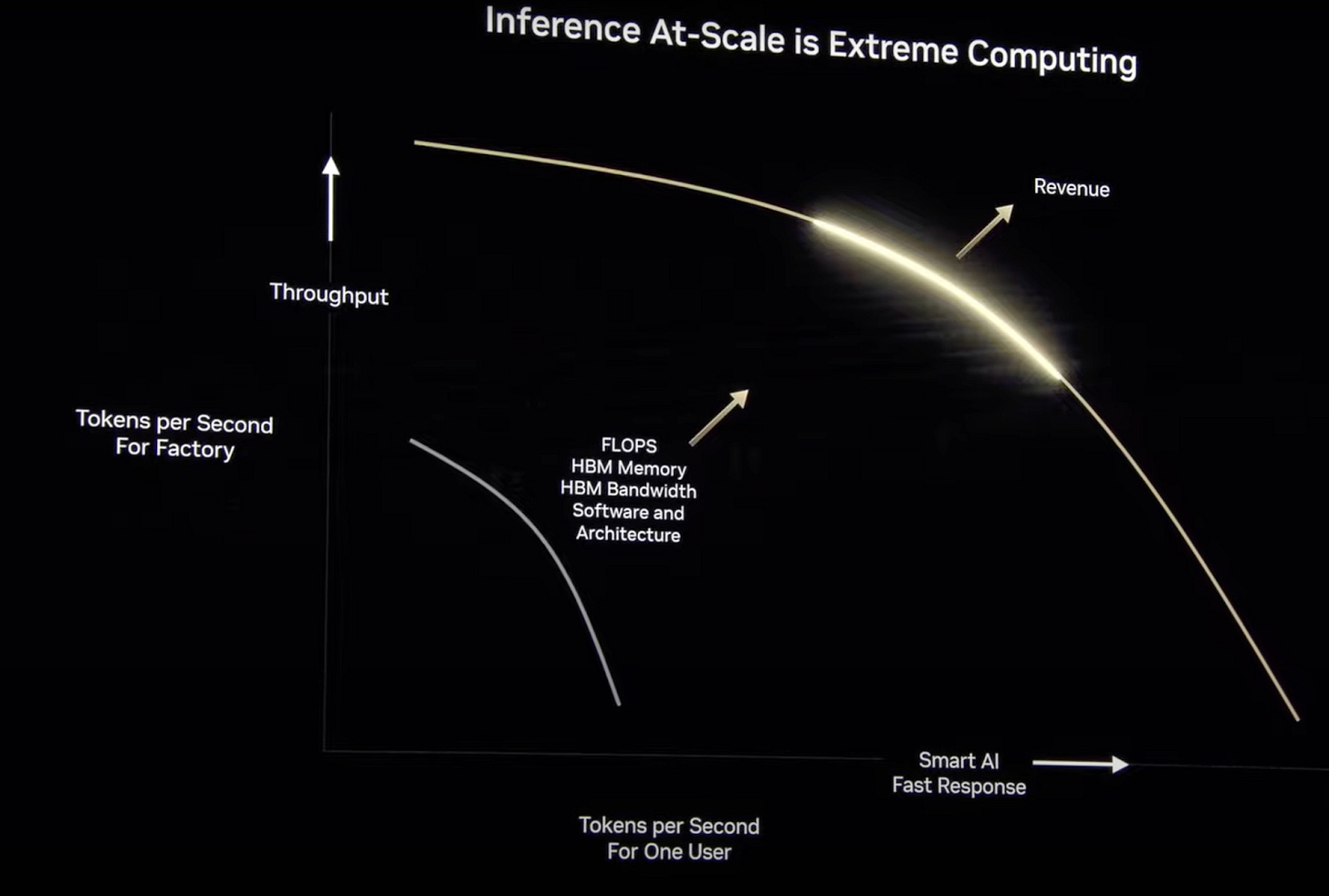

Jensen Huang explained that models are no longer generating single-step outputs—they are now reasoning step-by-step, using chain-of-thought, self-verification, and tool use.

This pushes the compute paradigm beyond raw FLOPS (floating-point operations per second). The goal is to maximize intelligence per watt, balancing latency (how quickly each response arrives) with throughput (how much reasoning the system completes overall).

This tradeoff is crucial in the age of agentic AI. For example, Jensen Huang described how batching in AI systems, similar to how factory lines group tasks to increase manufacturing efficiency, can dramatically boost throughput. But real-time interactions, such as chatbots, require fast, low-latency token responses, and larger batches make each user wait longer, so throughput and latency pull against each other. Blackwell helps balance this by enabling high-throughput, low-power token generation, which is critical for agentic systems that must think deeply but respond quickly.
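
The batching tradeoff can be illustrated with a toy cost model. This is only a sketch: it assumes each decoding step pays a fixed cost plus a small cost per sequence in the batch, and the constants `fixed_ms` and `per_seq_ms` are made-up numbers, not measurements of any NVIDIA hardware.

```python
def decode_step_ms(batch_size: int, fixed_ms: float = 20.0, per_seq_ms: float = 0.5) -> float:
    """Time for one decoding step that emits one token for every sequence in the batch."""
    return fixed_ms + per_seq_ms * batch_size


def throughput_tokens_per_s(batch_size: int) -> float:
    """Total tokens generated per second, summed over all users in the batch."""
    return batch_size * 1000.0 / decode_step_ms(batch_size)


def per_user_tokens_per_s(batch_size: int) -> float:
    """Tokens per second seen by a single user; this falls as the batch grows."""
    return 1000.0 / decode_step_ms(batch_size)


if __name__ == "__main__":
    for batch in (1, 8, 64, 256):
        print(
            f"batch={batch:4d}  "
            f"total={throughput_tokens_per_s(batch):8.1f} tok/s  "
            f"per-user={per_user_tokens_per_s(batch):5.1f} tok/s"
        )
```

In this model, growing the batch raises total throughput because the fixed cost is shared, while each individual user receives tokens more slowly. That is the tension the keynote described.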

A Quick Overview: What Do “Self-Verification” and “Tool Use” Mean?

Self-verification refers to an AI model’s ability to check its own work before returning an answer. This might include rerunning logic, detecting contradictions, or self-correcting errors. For example, a math model might compute an answer and then verify it with a second method.
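
As a rough illustration of checking an answer with a second method, the sketch below compares a closed-form result against an independent brute-force computation. All function names are hypothetical, and a real model's self-verification is learned behavior rather than a hard-coded comparison like this.

```python
from typing import Callable


def answer_with_check(
    propose: Callable[[int], int],
    verify: Callable[[int], int],
    n: int,
) -> tuple[int, bool]:
    """Return the proposed answer plus a flag saying whether an independent method agrees."""
    proposed = propose(n)
    return proposed, proposed == verify(n)


def closed_form_sum(n: int) -> int:
    """Sum of 1..n via the formula n(n+1)/2."""
    return n * (n + 1) // 2


def brute_force_sum(n: int) -> int:
    """Sum of 1..n by direct addition, used as the independent second method."""
    return sum(range(1, n + 1))


def buggy_sum(n: int) -> int:
    """A deliberately wrong first attempt (off by one) to show the check failing."""
    return n * (n - 1) // 2
```

Calling `answer_with_check(buggy_sum, brute_force_sum, 100)` returns the wrong answer with the flag set to `False`, which is the signal a system would use to retry or correct itself before responding.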

Tool use means the model can call external APIs, run code, or fetch real-time data from systems outside the model. This enables it to move beyond memorized knowledge and interact with dynamic, real-world environments—for example, by using a calendar app, a search engine, or a database API.
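
A minimal sketch of the dispatch side of tool use follows. It assumes the model emits its request as a JSON string with `tool` and `args` fields; that message shape, the `TOOLS` registry, and both example tools are hypothetical stand-ins for real calendar, search, or database APIs.

```python
import json
from datetime import date
from typing import Any, Callable


# Hypothetical tools; a real system would call external services here.
def get_today(_: dict[str, Any]) -> str:
    """Return today's date, something a model cannot know from training data."""
    return date.today().isoformat()


def add_numbers(args: dict[str, Any]) -> float:
    """Add two numbers supplied by the model."""
    return args["a"] + args["b"]


TOOLS: dict[str, Callable[[dict[str, Any]], Any]] = {
    "get_today": get_today,
    "add_numbers": add_numbers,
}


def run_tool_call(model_output: str) -> Any:
    """Parse a JSON tool request and dispatch it to the matching registered function."""
    request = json.loads(model_output)
    name = request["tool"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](request.get("args", {}))
```

The result of `run_tool_call` would normally be fed back into the model's context so it can continue reasoning with fresh information.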

These are foundational capabilities for agentic systems that plan, act, reflect, and adapt.

Blackwell is built to hit this sweet spot between fast responses and deep, multi-step reasoning.

Agentic AI and Physical AI: The New Frontiers

We’re entering an era where AI doesn’t just reply—it acts.

Agentic AI systems can:

Plan

Use tools

Adapt their behavior

Interact with real or simulated environments

Example: Imagine a medical AI assistant:

It receives a patient’s health record

Searches PubMed and clinical databases

Generates hypotheses about potential conditions

Uses a drug interaction API to propose treatments

Simulates side effects using a digital twin of the patient

And finally, delivers a decision tree to the physician, all in real time

This is more than a chatbot. It’s a system that autonomously reasons, coordinates multiple tools, and adapts to feedback.

With Omniverse and Isaac platforms, NVIDIA’s Blackwell will enable physical AI—from robotic arms to autonomous vehicles to AI-native digital twins.

Example: Take a warehouse robot, as highlighted in our blog on launching Groot. Rather than following hardcoded paths, this robot uses agentic intelligence to:

Map the warehouse autonomously

Learn optimal paths over time based on changing layouts

Coordinate with other robots to avoid collisions

Adapt tasks in real time depending on inventory changes

Optimize energy usage while completing pick-and-pack tasks
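
The path-adaptation steps in the list above can be sketched with a simple replanning routine. This is an assumption-laden illustration: it uses breadth-first search on a small text grid, which is not how the robot in the article is stated to work, and the grid encoding (`#` for blocked, `.` for free) is invented for the example.

```python
from collections import deque
from typing import Optional

Cell = tuple[int, int]


def shortest_path(grid: list[str], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Breadth-first search on a grid; '#' cells are blocked and '.' cells are free."""
    rows, cols = len(grid), len(grid[0])
    came_from: dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal unreachable with the current layout


# Layout before and after a pallet ('#') is dropped across part of the middle row.
layout_before = ["...", "...", "..."]
layout_after = ["...", "##.", "..."]
```

Rerunning `shortest_path` whenever the layout changes gives the robot a new route around the obstacle. A production system would likely combine this kind of replanning with learned models and coordination between robots.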

With Blackwell’s memory-sharing capabilities, compute density, and low-latency GPU interconnects, these robots can not only perceive their world but also reason within it.

Beyond China

The global appetite for AI is larger than any single market. Blackwell is already being adopted across:

AWS, Azure, Oracle

Sovereign AI initiatives in UAE, France, India

Industrial and enterprise AI workloads

As China doubles down on local innovation, the rest of the world is accelerating AI infrastructure powered by NVIDIA.

Final Thought: Necessity Is the Mother of Invention

Our recent blog post on DeepSeek’s breakthroughs concluded with this phrase, which also applies here.

Yes, NVIDIA faces geopolitical headwinds. Yes, billions in revenue are on pause.

But necessity fuels innovation. Blackwell is NVIDIA’s invention for this moment—and it may define the next decade of AI.