Superintelligence Needs Superpower: Why AI’s Next Bottleneck Is Electricity

Meta Unveils Prometheus and Hyperion: The First Gigawatt AI Superclusters

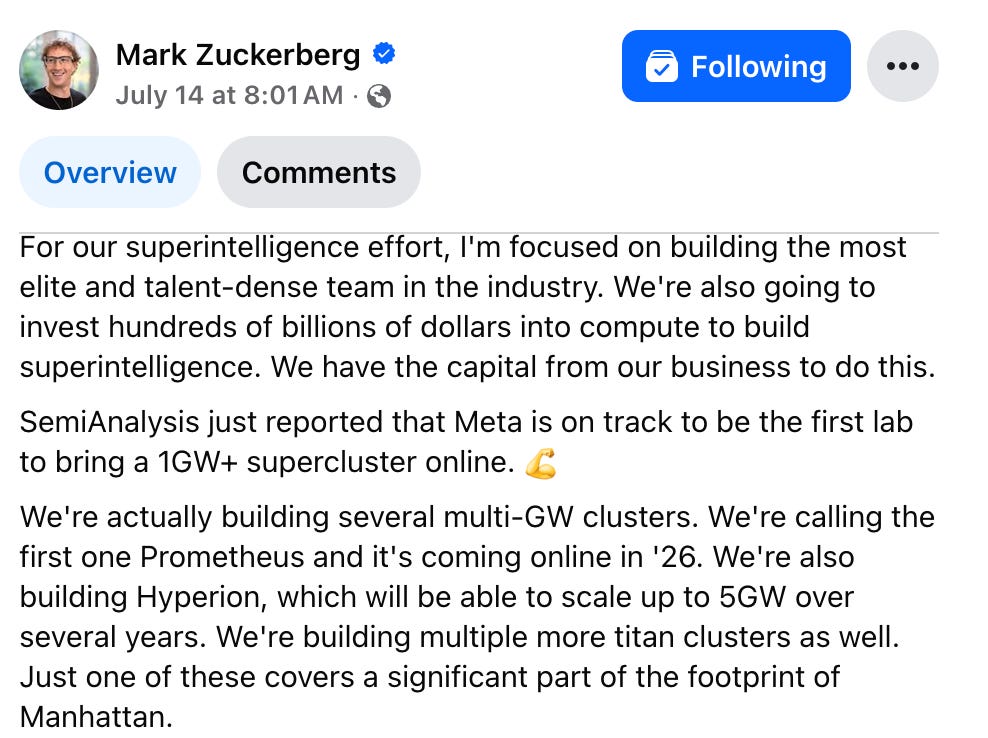

Following its $14.3 billion investment into talent and infrastructure through Scale AI—a move that signaled Meta’s full-throttle commitment to AI superintelligence—the company has unveiled two of the most significant AI data center projects in history: Prometheus and Hyperion.

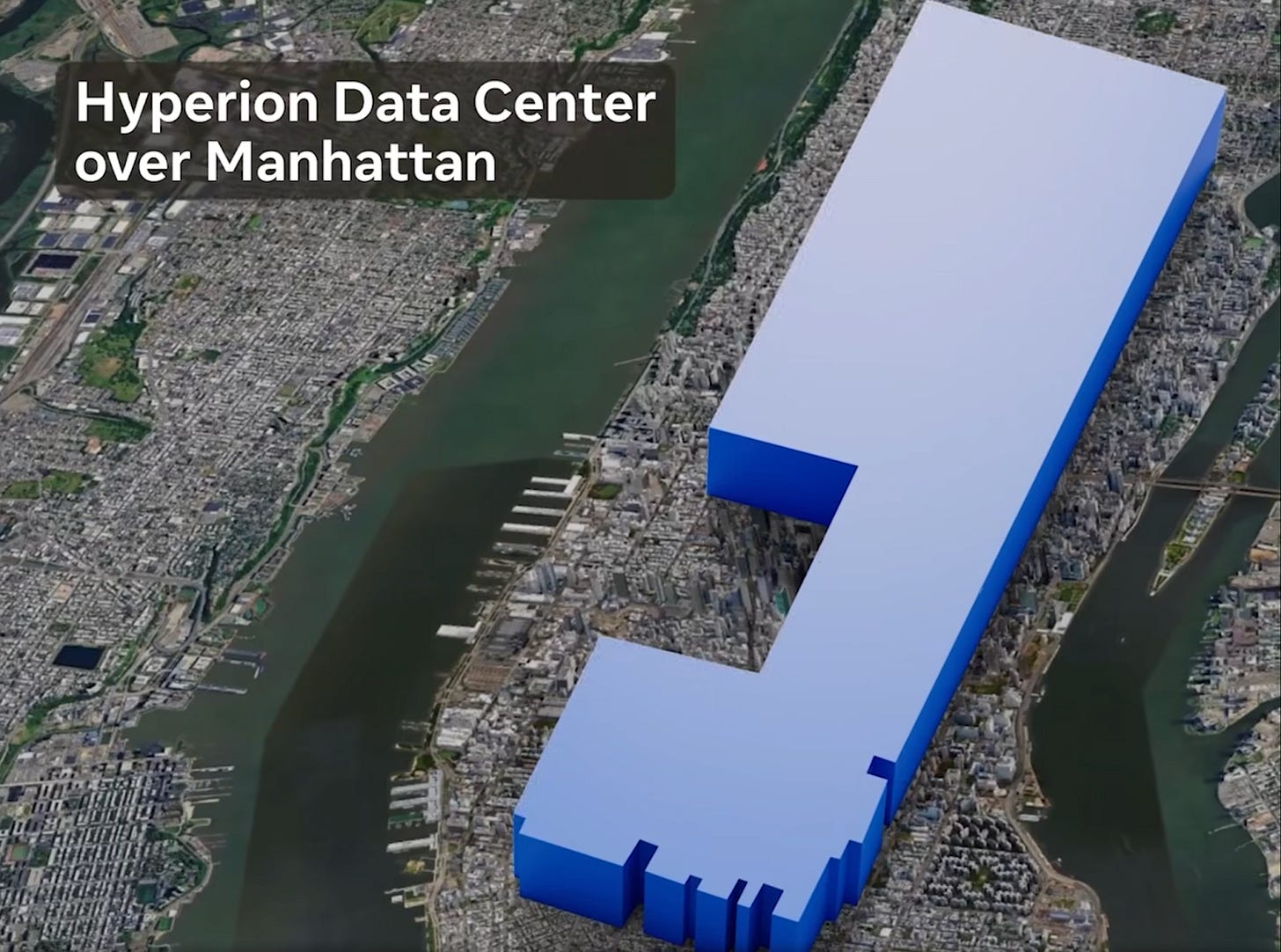

Prometheus, the first of Meta’s so-called “titan clusters,” is slated to come online in 2026. It is designed to deliver over 1 gigawatt (GW) of power, roughly comparable to the electricity demand of a small U.S. state such as Rhode Island or Vermont, and it marks the first time a data center project has reached gigawatt-scale territory. Hyperion, a second installation in development, is expected to scale up to 5 GW over time.
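To put the gigawatt figures in perspective, a back-of-the-envelope conversion from continuous power to annual energy may help. The sketch below assumes each cluster draws its full rated power around the clock (a 100% load factor, which is an illustrative assumption and not a figure from Meta). The function name `annual_energy_twh` is hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_twh(power_gw: float, load_factor: float = 1.0) -> float:
    """Convert a continuous power draw in GW to annual energy in TWh.

    load_factor is the assumed fraction of rated power drawn on average
    (1.0 means running flat out all year, an illustrative assumption).
    """
    if not 0.0 <= load_factor <= 1.0:
        raise ValueError("load_factor must be between 0 and 1")
    # GW * hours = GWh; divide by 1,000 to express the result in TWh.
    return power_gw * load_factor * HOURS_PER_YEAR / 1000.0


if __name__ == "__main__":
    # Rated power figures taken from the article: 1 GW and 5 GW.
    for name, gw in [("Prometheus", 1.0), ("Hyperion", 5.0)]:
        print(f"{name}: {gw} GW -> {annual_energy_twh(gw):.2f} TWh/year")
```

Under these assumptions, 1 GW of continuous draw works out to 8.76 TWh per year and 5 GW to 43.8 TWh per year. A lower load factor scales the result down proportionally.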

In a dramatic reveal, Mark Zuckerberg shared a visual of the data center’s footprint superimposed over Manhattan, highlighting the massive scale of the undertaking. The image served as a clear statement: Meta’s infrastructure ambitions now rival those of national utilities.

Meta says it is building several of these multi-GW clusters as part of a long-term strategy to lead in “superintelligence”—a next-generation leap in AI capabilities. The Prometheus and Hyperion clusters will house tens of thousands of AI chips, including the custom Meta Training and Inference Accelerator (MTIA) and GPUs from major vendors. These compute resources are critical to supporting large-scale training runs for frontier models.

With these announcements, Meta has repositioned itself from a social media company dabbling in AI to one of the industry’s most formidable infrastructure players.

The Age of Compute: Why Electricity is the New Bottleneck

The AI arms race is no longer just about chips. It’s about power — in the literal sense.

In a CNBC interview, Zuckerberg said, "Chips are not the bottleneck anymore. Electricity is." Demand for high-performance GPUs, like Nvidia’s H100s and Blackwell chips, is outpacing the availability of power to run them. AI compute is power-hungry, and current infrastructure wasn’t built to handle workloads requiring gigawatts of constant, uninterrupted power.

AI is a learning machine. And in network-effect businesses, when the learning machine learns faster, everything accelerates. It accelerates to its natural limit. The natural limit is electricity. Not chips — electricity really.

That is how Eric Schmidt, former CEO of Google, describes electricity as the bottleneck for AI development.

Meta’s data center in Arizona is pushing up against the limitations of the regional grid, requiring multi-layered strategies involving grid interconnection, power purchase agreements, and clean energy sourcing.

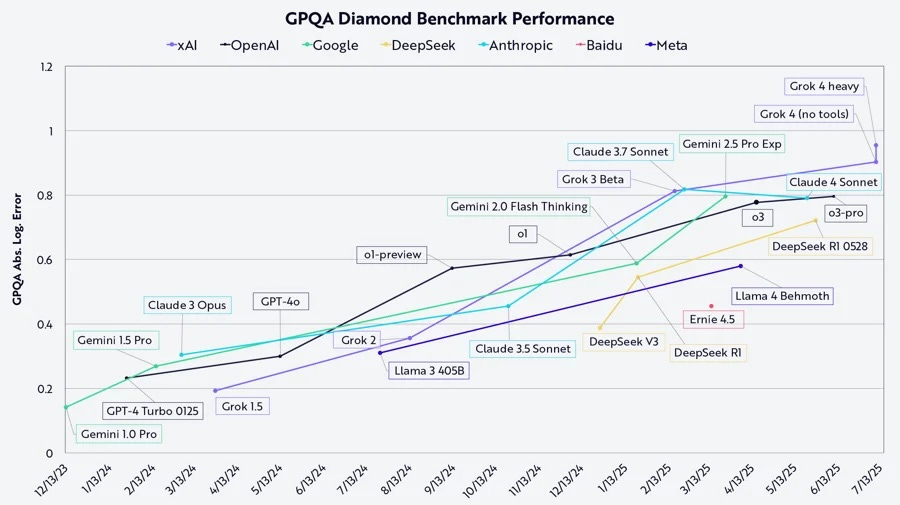

With Grok 4, xAI Retakes the Large Language Model Lead

While Meta is racing to solve the infrastructure challenge, Elon Musk’s xAI has already delivered results—driven by bold investment in compute and infrastructure. Grok 4 stands as a proof point of what’s possible when these pieces come together.

Grok 4 has emerged as a breakout model in the AI space, notably outperforming peers on challenging benchmarks like Humanity’s Last Exam. A key reason for its success lies in its tool-augmented training, a method that leverages external tools during the training process to enhance reasoning capabilities. This approach is extremely compute-intensive, requiring massive parallel GPU clusters and consistent power.

According to ARK Invest’s recent benchmark report, Grok 4 achieved a 38.6% score on Humanity’s Last Exam with tool use, significantly outperforming competitors. That result was due in large part to Grok's training regime, which demanded massive compute resources that could only be sustained with a robust and scalable energy infrastructure.

Colossus: Powering Superintelligence with Custom Infrastructure

To support training at this scale, Elon Musk’s xAI designed Colossus, a flagship data center reported to host over 100,000 GPUs and believed to draw up to 1.4 GW of power. The facility operates with a hybrid energy strategy that includes on-site natural gas turbines and solar energy. This configuration gives Colossus flexibility and independence from grid bottlenecks, enabling the uninterrupted, large-scale compute needed for Grok 4.

While solar installations help supplement the energy mix, natural gas remains the primary source for consistent, around-the-clock power. This strategy, while effective in delivering rapid compute, has drawn criticism from environmental advocates and local communities.

In Memphis, where part of Colossus' infrastructure and support operations are located, residents have raised concerns over environmental impact, air emissions, high water usage, and the lack of transparency in project development. Critics argue that while the project fuels technological progress, it may come at the cost of local environmental degradation and limited community engagement.

Meta’s Diverse Energy Playbook: Beyond Just Nuclear

Unlike xAI’s reliance on self-built fossil fuel infrastructure, Meta is taking a broader, more diversified approach to power. While it has indeed partnered with Constellation to secure 24/7 carbon-free electricity—including power from nuclear sources through long-term contracts—it is not solely betting on nuclear.

Meta is also developing its own gas-turbine–based power generation capabilities, including a project in Ohio. These on-site facilities allow Meta to maintain reliable power delivery while complementing its renewable and nuclear strategies. This dual-path strategy signals that Meta understands the urgency of power constraints and is willing to invest in both near-term reliability and long-term sustainability.

Small Modular Reactors: A Future Energy Option

Meta, like several tech giants, has also shown interest in Small Modular Reactors (SMRs) as a potential long-term energy source. These next-gen nuclear reactors promise safer, scalable, and more flexible deployment than traditional nuclear power plants. However, most SMR solutions won’t be ready until after 2030 due to regulatory and technological hurdles.

Sidebar: What Are Small Modular Reactors (SMRs)?

Small Modular Reactors (SMRs) are an emerging class of nuclear power technology designed to be compact, efficient, and safer than traditional large-scale reactors. Unlike conventional nuclear plants that often produce over 1,000 megawatts (MW) and require over a decade to build, SMRs typically generate between 50 and 300 MW and can be manufactured off-site and deployed in modular units. This makes them faster to construct (potentially within 5 to 7 years) and more adaptable to diverse energy demands.
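A rough sizing exercise shows what that output range would mean for a gigawatt-scale cluster. The sketch below is illustrative only: it assumes every unit runs at its full rated output with no spare capacity for maintenance or redundancy, and the function name `smr_units_needed` is hypothetical. The 1 GW and 5 GW demand figures are the Prometheus and Hyperion targets mentioned earlier in the article.

```python
import math

SMR_MIN_MW = 50   # low end of the typical SMR output range quoted above
SMR_MAX_MW = 300  # high end of that range


def smr_units_needed(demand_gw: float, unit_mw: float) -> int:
    """Return how many SMR units of a given size cover a demand in GW.

    Assumes each unit delivers its full rated output continuously,
    with no reserve margin (a simplifying assumption).
    """
    if demand_gw < 0 or unit_mw <= 0:
        raise ValueError("demand_gw must be >= 0 and unit_mw must be > 0")
    # Convert GW to MW, then round up because units are indivisible.
    return math.ceil(demand_gw * 1000 / unit_mw)


if __name__ == "__main__":
    for name, gw in [("Prometheus", 1.0), ("Hyperion", 5.0)]:
        fewest = smr_units_needed(gw, SMR_MAX_MW)
        most = smr_units_needed(gw, SMR_MIN_MW)
        print(f"{name} ({gw} GW): {fewest} to {most} SMR units")
```

Under these assumptions, a 1 GW cluster would need somewhere between 4 and 20 units, and a 5 GW cluster between 17 and 100. Real deployments would likely need more to cover outages and refueling.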

SMRs are engineered with passive safety features, meaning they can cool down and shut off without human intervention or external power, reducing the risk of catastrophic failure. Their smaller footprint and modular design also allow them to be sited closer to industrial facilities or AI data centers—offering the possibility of local, consistent, and carbon-free power.

For companies like Meta and others seeking scalable energy for supercomputing clusters, SMRs represent a long-term potential solution to the electricity bottleneck. However, as of today, no SMR is operational at commercial scale in the U.S., and most deployment projections suggest readiness no earlier than the early 2030s.

The Coming Storm: Can the Grid Keep Up?

Meta's bold vision raises pressing questions. With AI data centers projected to consume 8% of U.S. electricity by 2030, the race to build intelligence is also a race to avoid grid overload.

While Meta relies on regulated utilities and long-term agreements, Colossus shows the advantage of owning power generation — even if it means higher emissions. But these models aren't mutually exclusive. Meta’s recent clean energy strategies, paired with its capital firepower, suggest it could eventually build its own clean plants as SMR tech matures.

Meanwhile, communities around these mega-clusters are growing increasingly aware of their local impacts: water use, emissions (for fossil-fueled clusters), and competition for grid access. The future of superintelligence may hinge not just on breakthroughs in AI but also on energy policy, infrastructure, and innovation.

Meta’s journey to build the most powerful AI infrastructure on Earth is as much about electrons as it is about algorithms. As Zuckerberg’s remarks suggest, the next frontier of AI won’t be won by chips alone. It will be powered by the watts behind them.